Reinforcement learning is the often forgotten sibling of supervised and unsupervised machine learning. But given its important role in training generative AI, I wanted to touch on the subject with a fun introductory example. Fun projects like these can always teach you a lot.

I was inspired by YouTuber Nick Renotte (video: https://www.youtube.com/watch?v=Mut_u40Sqz4) and retried Project 2 from his two-year-old tutorial. In that project, we teach a model to race a car.

F1 drivers learn from experience, and so does a reinforcement learning agent. Let’s look at how to train such an agent to race by letting it learn from repeated experience.

A quick recap of Reinforcement Learning (RL)

The docs of the RL library Stable Baselines3 state the following:

“Reinforcement Learning differs from other machine learning methods in several ways. The data used to train the agent is collected through interactions with the environment by the agent itself (compared to supervised learning where you have a fixed dataset for instance).”

https://stable-baselines3.readthedocs.io/en/master/guide/rl_tips.html

So in RL, there is the concept of an agent/model interacting with its surroundings and learning through rewards. Actions that lead to achieving the goal are rewarded and so are reinforced as being “good” actions.
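To make the idea of "rewarded actions get reinforced" concrete, here is a deliberately tiny sketch. It is a toy with two actions and a made-up reward, not Gymnasium, not CarRacing and not PPO. The names `step` and `run`, the fixed exploration schedule and the learning rate are all assumptions chosen for illustration.

```python
# Toy illustration only: a two-action "environment" and an agent that tracks
# how much reward each action has earned. All names here are hypothetical.


def step(action: int) -> float:
    """Environment: action 1 makes progress (+1 reward), action 0 does nothing (0)."""
    return 1.0 if action == 1 else 0.0


def run(n_steps: int = 50, learning_rate: float = 0.5) -> list[float]:
    values = [0.0, 0.0]  # the agent's current estimate of each action's reward
    for t in range(n_steps):
        if t % 10 == 0:
            # Explore on a fixed schedule, alternating between the two actions.
            action = (t // 10) % 2
        else:
            # Exploit: pick the action currently believed to be best.
            action = max(range(2), key=lambda a: values[a])
        reward = step(action)
        # Nudge the estimate for the chosen action towards the observed reward.
        values[action] += learning_rate * (reward - values[action])
    return values


values = run()
print(values)  # the estimate for action 1 ends up higher than for action 0
```

Once the agent has tried action 1 and seen it pay off, it keeps choosing it. Real algorithms are far more sophisticated, but the feedback loop of act, observe reward, adjust is the same.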

For the environment, i.e. our race track, we can use the ready-made “CarRacing” environment from the “Gymnasium” project, the actively developed fork of OpenAI’s “Gym”. Gymnasium offers many pre-made environments, which helps you get started quickly with reinforcement learning.

In CarRacing, the reward is negative (-0.1) for every frame and positive (+1000/N) for every track tile visited, where N is the total number of tiles in the track. The negative reward penalises staying still, so the agent is pushed to reach the finish as quickly as possible. The positive reward encourages the car to keep visiting new parts of the track.
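The reward rule can be written out as a small calculation. This is a sketch of the arithmetic only. The function name `episode_reward` and the track length of 300 tiles are assumptions for illustration, since the real track is generated randomly. The full-lap case follows the example in the Gymnasium docs: a lap finished in 732 frames scores 1000 - 0.1 * 732 = 926.8.

```python
def episode_reward(frames: int, tiles_visited: int, total_tiles: int) -> float:
    """Total reward for an episode under the CarRacing rule described above."""
    per_tile_bonus = 1000.0 / total_tiles  # +1000/N for each new tile
    frame_penalty = 0.1 * frames           # -0.1 for every frame
    return tiles_visited * per_tile_bonus - frame_penalty


# Hypothetical track with 300 tiles, all visited in 732 frames.
full_lap = episode_reward(frames=732, tiles_visited=300, total_tiles=300)
print(round(full_lap, 1))  # 926.8

# A car that sits still for 100 frames only collects the penalty.
idle = episode_reward(frames=100, tiles_visited=0, total_tiles=300)
print(round(idle, 1))  # -10.0
```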

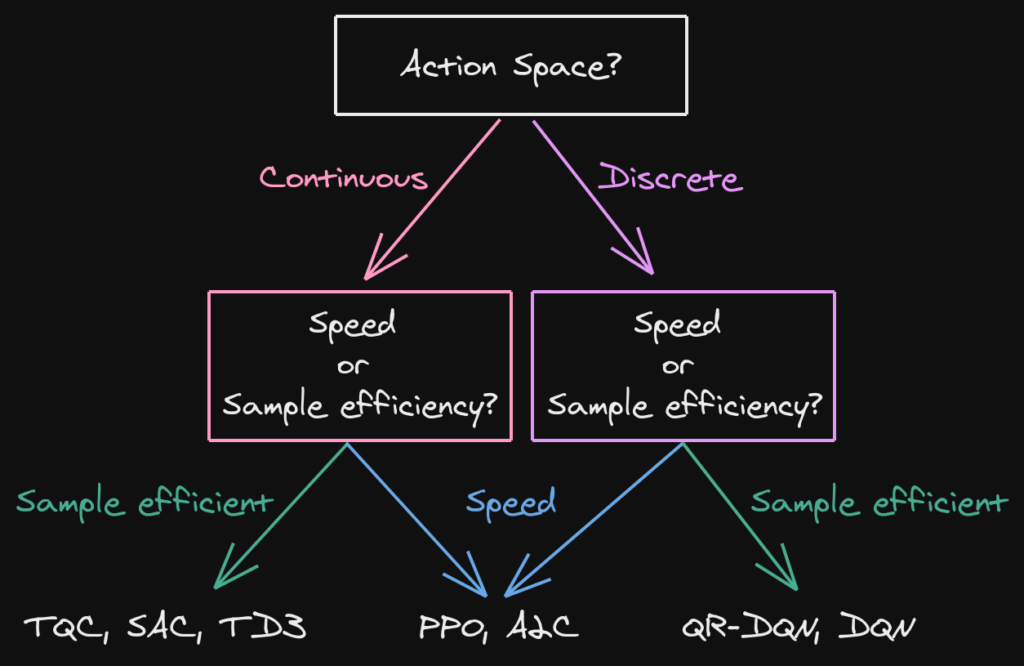

For our agent, I will use the PPO algorithm from the Stable Baselines3 library, which ships with sensible general-purpose default hyperparameters for its models.

PPO: Proximal Policy Optimization

PPO is an algorithm for training RL agents. It has also been used to fine-tune large language models via reinforcement learning from human feedback (RLHF: https://en.wikipedia.org/wiki/Reinforcement_learning_from_human_feedback).

In PPO, the agent starts out selecting largely random actions, which become less random over time as it learns. PPO is conservative in its learning process: it makes sure not to change its policy (essentially its brain) too much in any single update. Constraining updates in this way tends to give stable training and consistent performance. However, it may also cause the policy to get stuck in a sub-optimal strategy. For this task, PPO seems to work well in a short amount of time without requiring huge memory resources. It’s a great way to get started.
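The "don't change too much per update" idea is usually expressed as PPO's clipped objective. Below is a minimal single-sample sketch of that formula in plain Python, not how Stable Baselines3 implements it internally. Here `ratio` is the probability of an action under the new policy divided by its probability under the old one, `advantage` says how much better than expected the action turned out, and the default `clip_range` of 0.2 matches the Stable Baselines3 PPO default.

```python
def clipped_objective(ratio: float, advantage: float, clip_range: float = 0.2) -> float:
    """PPO clipped surrogate objective for a single (state, action) sample."""
    # Keep the probability ratio inside [1 - clip_range, 1 + clip_range].
    clipped_ratio = max(1.0 - clip_range, min(ratio, 1.0 + clip_range))
    # Take the more pessimistic of the unclipped and clipped estimates.
    return min(ratio * advantage, clipped_ratio * advantage)


# A good action whose probability grew by 50%: the gain is capped at 1.2.
print(clipped_objective(ratio=1.5, advantage=1.0))   # 1.2
# A bad action whose probability was halved: the estimate is held at -0.8.
print(clipped_objective(ratio=0.5, advantage=-1.0))  # -0.8
# A small change stays inside the clip range and is left untouched.
print(clipped_objective(ratio=1.1, advantage=1.0))   # 1.1
```

Once the ratio leaves the clip range in the direction that would improve the objective, the value stops changing, so there is no incentive to push the policy any further in that update.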

Read more about it: https://spinningup.openai.com/en/latest/algorithms/ppo.html

Whereas Nick used the Stable Baselines3 library directly, I will use the Stable Baselines3 “RL Zoo”, a framework for using Stable Baselines3 in an arguably quicker and more efficient way, all from the command line. RL Zoo docs: https://stable-baselines3.readthedocs.io/en/master/guide/rl_zoo.html
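For comparison, using Stable Baselines3 directly from Python looks roughly like the sketch below. This is not Nick's exact code and not what RL Zoo runs (the zoo applies its own tuned hyperparameters and wrappers). It assumes `stable-baselines3` and `gymnasium[box2d]` are installed. The function name `train_car_racing`, the save path and the small default step count are placeholders, and depending on your Gymnasium version the environment id may differ from `CarRacing-v2`.

```python
def train_car_racing(total_timesteps: int = 10_000, save_path: str = "ppo_car_racing"):
    """Train a PPO agent on CarRacing with default settings and save it to disk."""
    # Imported inside the function so the file loads without the heavy dependencies.
    import gymnasium as gym
    from stable_baselines3 import PPO

    # Environment id as used in this post; newer Gymnasium releases may use another version.
    env = gym.make("CarRacing-v2")
    # CnnPolicy because the observations are images of the track.
    model = PPO("CnnPolicy", env, verbose=1)
    model.learn(total_timesteps=total_timesteps)
    model.save(save_path)  # hypothetical output path
    env.close()
    return model
```

Calling `train_car_racing()` starts a short training run. The RL Zoo commands in the next section wrap the same kind of workflow behind a command-line interface.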

Training with RL Zoo – Reinforcement boogaloo

RL Zoo gives us an easy way to start training our PPO agent (see: https://github.com/DLR-RM/rl-baselines3-zoo). I followed the installation from source (this requires git). I also recommend creating a virtual environment for all the dependencies.

git clone https://github.com/DLR-RM/rl-baselines3-zoo

cd rl-baselines3-zoo

Your system is probably different from mine. My commands are aimed at Linux/macOS, so you may need to translate some of them for your system.

Really make sure to have all the dependencies!

pip install -r requirements.txt

pip install -e .

Now let’s start training. Specify the algorithm (PPO), the environment (CarRacing-v2) and the number of time steps (try different numbers!). For example, you can try 500000 time steps.

python -m rl_zoo3.train --algo ppo --env CarRacing-v2 --n-timesteps 500000

This may take a few minutes. If you’re on Linux and get “error: legacy-install-failure”, you may also need to install a few more packages. The commands below use dnf (Fedora); use your distribution’s package manager otherwise.

sudo dnf install python3-devel

sudo dnf install swig

sudo dnf groupinstall "Development Tools"

Once training is complete, you can visualise the learning process by plotting the reward over time/training episodes.

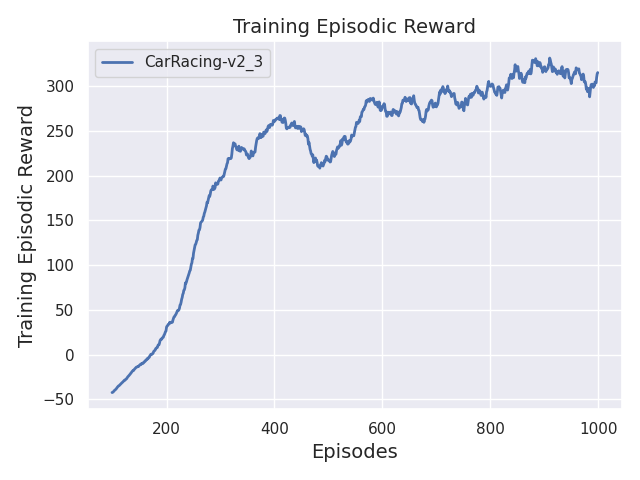

python scripts/plot_train.py -a ppo -e CarRacing-v2 -y reward -f logs/ -x steps

A reward that increases over the episodes indicates that the agent is learning.
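Per-episode rewards are noisy, so an upward trend is easier to judge after smoothing. The sketch below is a plain moving average over a list of episode rewards. It is independent of RL Zoo's plotting script, and the reward values are made up for illustration.

```python
def moving_average(rewards: list[float], window: int) -> list[float]:
    """Average each run of `window` consecutive episode rewards."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        sum(rewards[i:i + window]) / window
        for i in range(len(rewards) - window + 1)
    ]


# Made-up episode rewards: noisy, but trending upwards.
episode_rewards = [-50.0, -20.0, 10.0, 40.0, 30.0, 90.0]
smoothed = moving_average(episode_rewards, window=3)
print(smoothed)
```

The dip from 40 to 30 in the raw numbers disappears in the smoothed series, which rises steadily.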

Record a video of your latest agent with

python -m rl_zoo3.record_video --algo ppo --env CarRacing-v2 -n 1000 --folder logs/

I tested the agent after 4,000,000 time steps of training (about 2 hours for me) and got the episode below. What a good run!

RL Zoo allows you to continue training if you want to improve the agent.

python train.py --algo ppo --env CarRacing-v2 -i logs/ppo/CarRacing-v2_1/CarRacing-v2.zip -n 50000

Thanks for reading!
